5 Reasons AI Answer Engines Ignore Your Site (& How to Get Cited)

Why aren't ChatGPT, Perplexity, Google AI Overviews, Gemini, Claude, and others citing your website?

Here’s the uncomfortable truth I’ve learned the hard way: if AI answer engines aren’t citing your site, it’s not because your content is “bad.” (It could be.) It’s because, to these systems, you’re either invisible, ambiguous, or not the most convenient evidence to use in an instant answer.

I’ve been obsessing over this for 7 months. In a recent conversation with my friend from GrowthX, Chandan (an SEO who lives in crawl logs the way the rest of us live in Slack), we discussed why Google’s AI Overviews, ChatGPT, and Perplexity skip some sites.

We also talked through tactics that actually move the needle on AI citations: Reddit presence, entity-first content, fixing crawl waste, and yes… making peace with the zero-click future.

Let’s see what’s really going on, then I’ll give you a practical way (a way that has worked for me, not some theory) to become “cite-able.”

Before we start, Key Takeaways (or TL;DR):

Be Present on UGC Platforms: AI engines trust and have data deals with platforms like Reddit. Your presence there is a major signal.

Structure Content as Answers: Use clear definitions, data points, and expert quotes to make your content easily "quotable" for AI.

Become a Clear Entity: Use Schema markup (Organization, Product) and entity-first writing to eliminate ambiguity for crawlers.

Fix Technical Crawl Issues: Eliminate 404s and slow page loads, as these make your site ineligible for consideration.

Build Authoritative Mentions: Citations from reputable domains act as a strong trust signal for AI systems.

Disclaimer: For all intents and purposes, AI-Answer Engine = ChatGPT, Gemini, Google AI Overviews, Claude, Grok, and other LLM chats.

1) You’re not present where answer engines “listen”

All AI Answer Engines lean heavily on UGC (user-generated content) and developer Q&A for timely, specific claims (which means Reddit and Stack Overflow). There’s a reason for that: both have formal deals with the biggest AI players.

OpenAI has a partnership with Reddit that gives ChatGPT structured, real-time access to Reddit content; Google also made a licensing deal with Reddit earlier, valued at around $60M/year, to use Reddit content in training and Search.

Which loosely translates to: if your brand never gets mentioned in high-signal UGC ecosystems, you’re missing a crucial marketing channel that answer engines already trust and can ingest easily.

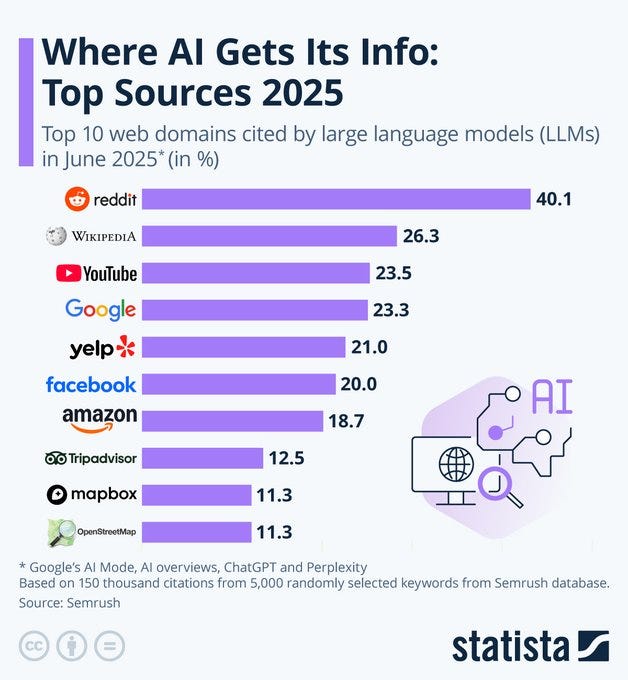

According to this Statista analysis, AI assistants frequently cite Reddit threads, no surprise given the licensing and freshness.

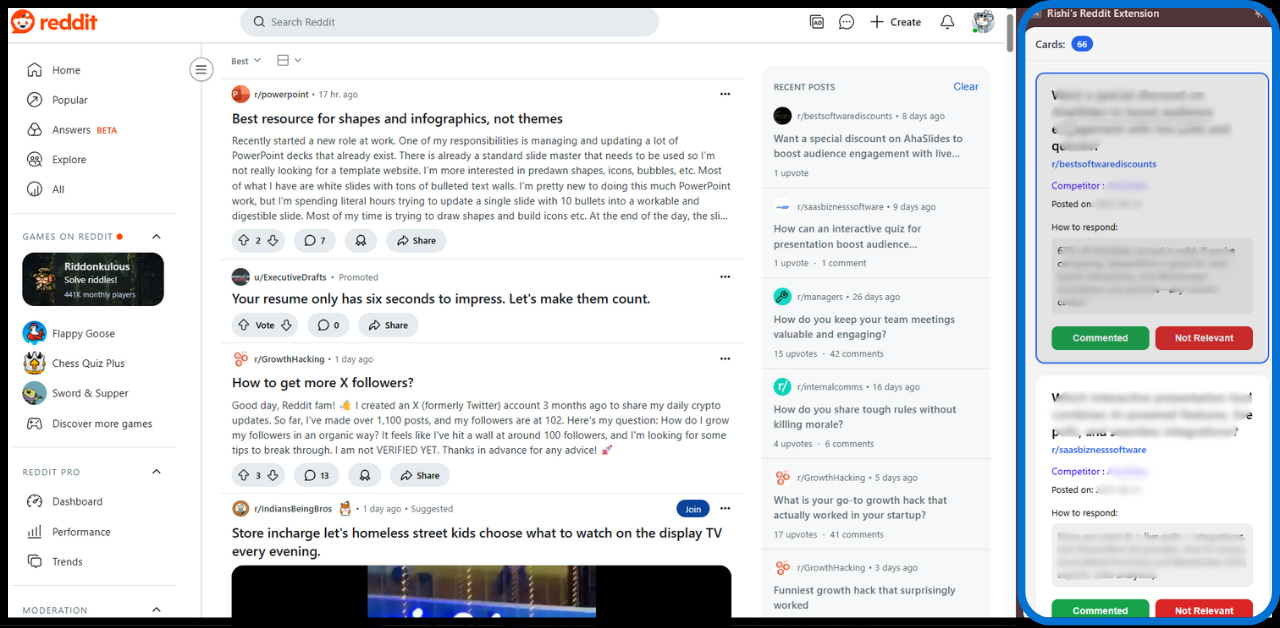

So, here’s what I did about it → I built a lightweight n8n workflow that monitors targeted subreddits, keywords, and specific competitor handles, then nudges me (via a side-panel Chrome extension) into relevant discussions.

I comment like a human (no links at first, no salesy tone, and I also engage outside my niche so the account looks normal), basically the “be present, be useful” play.

That presence seeds future citations without tripping spam alarms.
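The n8n workflow itself is mostly nodes and config, but the matching step at its core is simple enough to sketch in Python. Treat this as a minimal illustration, not the workflow itself: the `Post` shape, the function name, and the sample subreddits and keywords are all assumptions, and fetching posts from Reddit is left out entirely.

```python
import re
from dataclasses import dataclass


@dataclass
class Post:
    # Hypothetical shape for a fetched post; real data will have more fields.
    subreddit: str
    title: str
    body: str
    url: str


def find_relevant_posts(posts, keywords, subreddits):
    """Return posts from watched subreddits that mention any keyword."""
    if not keywords:
        return []
    watched = {name.lower() for name in subreddits}
    # Whole-phrase, case-insensitive match, so "poll" would not match "pollution".
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(k) for k in keywords) + r")\b",
        re.IGNORECASE,
    )
    hits = []
    for post in posts:
        if post.subreddit.lower() not in watched:
            continue
        if pattern.search(f"{post.title}\n{post.body}"):
            hits.append(post)
    return hits
```

Whatever does the fetching (n8n, a cron job, anything else) only needs to hand this function a list of posts; the hits are what get pushed to the notification side.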

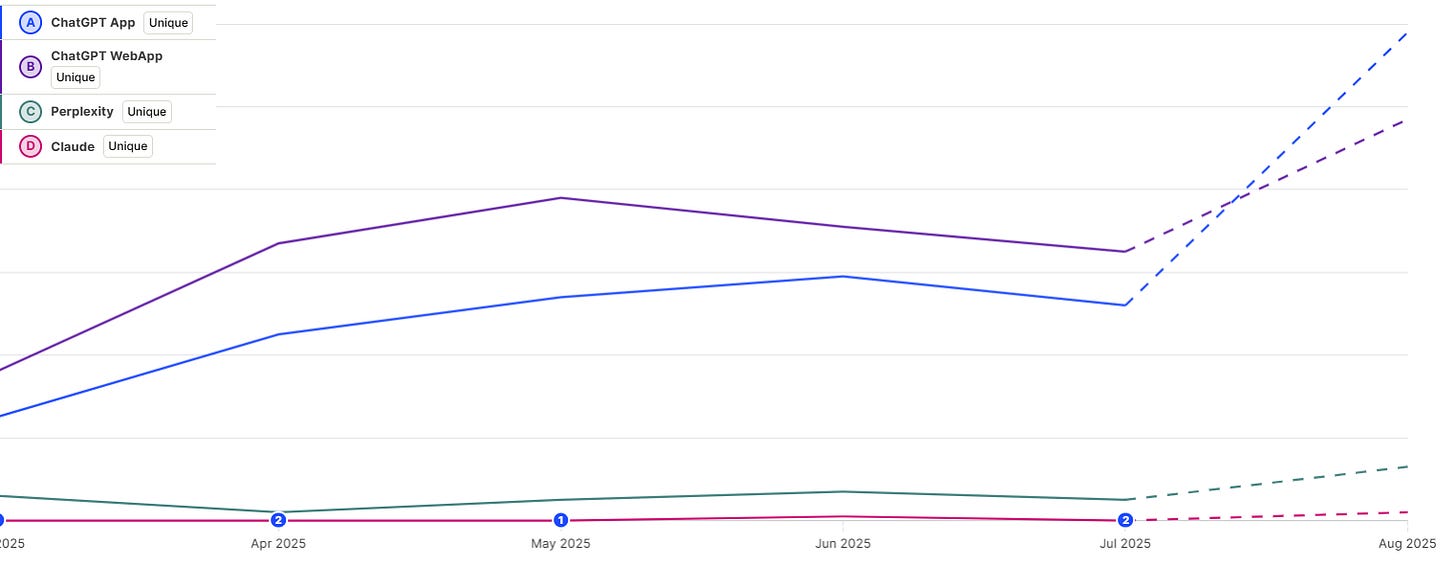

And here’s the unique traffic flowing in:

2) Your pages aren’t “answer-shaped”

Answer engines love content that’s easy to quote and verify: clear definitions, specific claims with numbers, and named experts. Google’s own guidance for AI features in Search is boring but important: the same SEO best practices still apply, and the system surfaces a diverse range of sources that are easy to understand and verify.

Also see what Google itself says about it: → Find information in faster & easier ways with AI Overviews in Google Search

If your article jumps straight into tactics with no definition, no data point, and no external reference, it’s harder to justify citing you.

So how do we change it? We make our content cite-able:

Open with a crisp definition and a stat from a credible report (and link it).

State a specific, testable claim (e.g., “X reduced setup time by 30%”).

Attribute it to a person and/or dataset (like Statista).

When your content makes verifiable statements (and backs them up), it’s easier for AI systems to lift a sentence and display your site as a source.
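If you want to audit openers in bulk, the three checks above can be roughed out as a heuristic. This is only a sketch under loose assumptions: the function name and the regex patterns are mine, the sample text is made up, and a pass here says nothing about whether the definition or the stat is actually any good.

```python
import re


def citeability_report(opening: str) -> dict:
    """Run three rough checks on an article's opening paragraph."""
    return {
        # A definition-style phrase such as "X is a ..." or "X refers to ...".
        "has_definition": bool(
            re.search(r"\b(?:is|are) (?:a|an|the)\b|\brefers to\b", opening, re.IGNORECASE)
        ),
        # Any digit at all: a percentage, a count, a dollar figure.
        "has_number": bool(re.search(r"\d", opening)),
        # A linked source, either a markdown link or a bare URL.
        "has_source_link": bool(re.search(r"https?://", opening)),
    }


# Made-up sample opener, for illustration only.
sample = (
    "Entity-first writing is a way of naming a thing and listing its attributes "
    "in plain text. In one test, X reduced setup time by 30% "
    "(source: https://example.com/report)."
)
print(citeability_report(sample))
```

Anything that fails all three checks is a good candidate for a rewritten intro.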

3) You’re an entity blur, not an entity

LLM answer engines resolve the web into entities (things with attributes and relationships). If your brand/product/person/topic isn’t expressed as an entity with clear attributes, you’re harder to match in retrieval and summarization.

The practical move from that would be to:

Add Organization and Product schema, fill in sameAs (official social profiles), and keep your logo/name consistent. This helps both Search and AI engines disambiguate (clarify) you. (Check this for more info on structured data → Intro to structured data markup in Google Search)

Use entity-first writing: name the entity, list its attributes and values in plain text. Entity-centric content makes extraction easier and reduces ambiguity for AI. If you’ve heard “semantic SEO,” this is the practical core.

For example, StreamAlive →

type: audience engagement tool;

use case: live polls, magic map;

integrations: Zoom/Teams/etc.
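To make the schema side concrete, here is a minimal sketch that builds Organization and Product JSON-LD in Python. Everything specific in it is a placeholder: the `example.com` URLs and profile links are not real profiles, and `build_entity_jsonld` is a hypothetical helper, not part of any library. Google's rich-result eligibility may require more properties than shown here, so check the structured data docs for your page type.

```python
import json


def build_entity_jsonld(org_name, org_url, logo_url, same_as, product_name, product_description):
    """Build a schema.org graph linking a Product to its Organization."""
    organization = {
        "@type": "Organization",
        "@id": org_url + "#organization",
        "name": org_name,
        "url": org_url,
        "logo": logo_url,
        # Official profiles that help disambiguate the brand.
        "sameAs": list(same_as),
    }
    product = {
        "@type": "Product",
        "name": product_name,
        "description": product_description,
        # Point back at the organization node instead of repeating it.
        "brand": {"@id": organization["@id"]},
    }
    return {"@context": "https://schema.org", "@graph": [organization, product]}


# Placeholder values, for illustration only.
data = build_entity_jsonld(
    org_name="Example Co",
    org_url="https://example.com/",
    logo_url="https://example.com/logo.png",
    same_as=["https://www.linkedin.com/company/example", "https://x.com/example"],
    product_name="Example Polls",
    product_description="Audience engagement tool for live polls in Zoom and Teams sessions.",
)
print('<script type="application/ld+json">')
print(json.dumps(data, indent=2))
print("</script>")
```

Note that the product description is written in the same entity-attributes-values style as the plain-text example above, so the markup and the visible copy say the same thing.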

4) Technically, you might be ineligible today

In one sentence: if search bots struggle to fetch, render, or understand your pages, they won’t cite you.

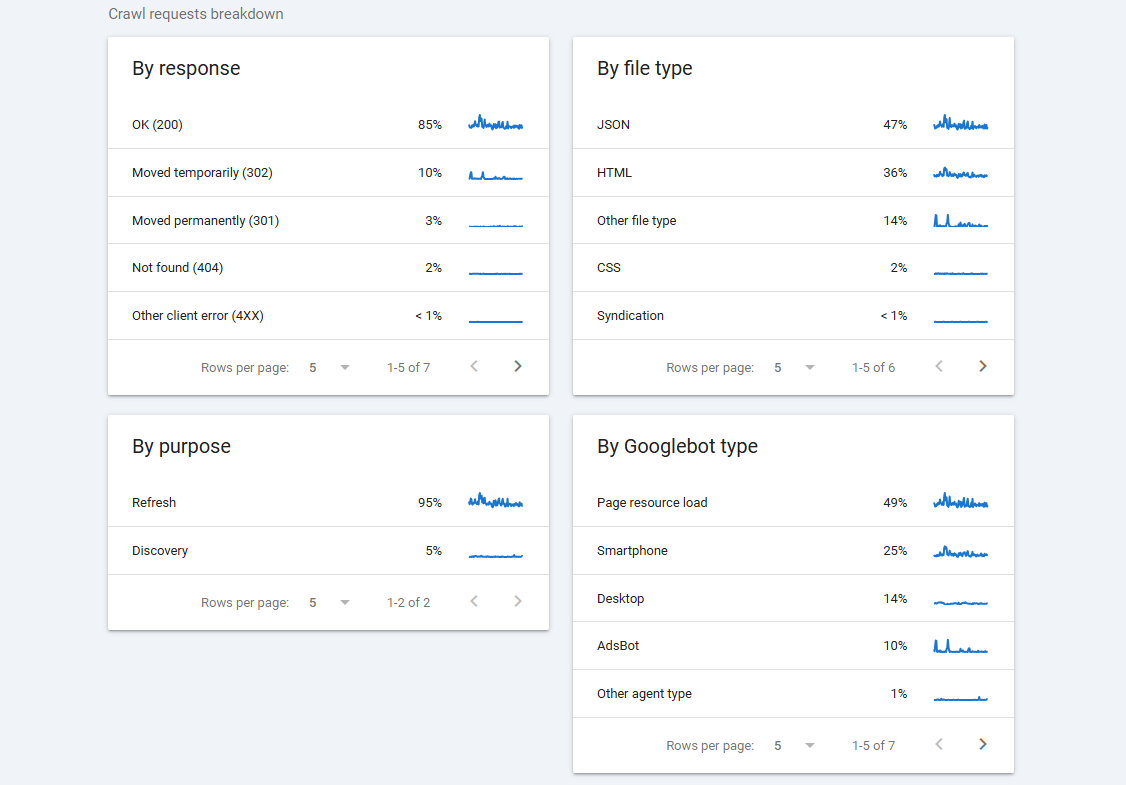

A few technical gotchas surfaced while we were screen-sharing crawl stats in Google Search Console:

Crawl waste: Too many 404s and soft 404s siphon crawl budget. Google also says that 4xx URLs are dropped from indexing; both 404 and 410 tell Google “this is gone”.

According to John Mueller: “A 410 will sometimes fall out a little bit faster than a 404.” So if you want Google (and, of course, other search engines) to drop removed pages a little sooner, return a 410 instead of a 404; it won’t do your SEO much harm.

This is what Search Engine Journal has to say about it.

Discovery vs. Refresh in Search Console: Discovery = new URLs fetched; Refresh = previously known URLs recrawled. If refresh dominates and discovery is low, you’re publishing too little (or crawling is throttled). Use the Crawl Stats report to diagnose. Ideally, Discovery makes up a healthy share of crawl requests rather than being dwarfed by Refresh, because a steady flow of newly discovered URLs is a sign that your website is fresh.

Server responsiveness: Slow TTFB (time to first byte) and heavy JS delay rendering, which is bad news for both classic indexing and answer engines that need to extract fast. Google’s AI features guidance also says the same things again and again: stick to core SEO quality practices.

Fixing this isn’t glamorous, but when Googlebot (or any fetcher) can reliably parse your content, it becomes eligible evidence for AI answers.
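On the 404 vs. 410 point, the server-side logic is small. Here is a sketch as a bare WSGI app, assuming you keep an explicit list of intentionally removed URLs; the paths and page content are hypothetical, and in practice you would more likely express the same rule in your web server or CMS config.

```python
# Hypothetical data: pages that exist, and pages removed on purpose.
LIVE_PAGES = {"/": "<h1>Home</h1>"}
GONE_PATHS = {"/old-pricing", "/blog/retired-post"}


def app(environ, start_response):
    """Serve live pages, 410 for removed pages, 404 for everything else."""
    path = environ.get("PATH_INFO", "/")
    if path in LIVE_PAGES:
        status, body = "200 OK", LIVE_PAGES[path]
    elif path in GONE_PATHS:
        # Removed on purpose: say so explicitly.
        status, body = "410 Gone", "<h1>This page has been permanently removed</h1>"
    else:
        # Unknown URL: a real 404 status, never a 200 with an error message
        # in the body (that pattern is what gets flagged as a soft 404).
        status, body = "404 Not Found", "<h1>Page not found</h1>"
    payload = body.encode("utf-8")
    start_response(status, [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(payload))),
    ])
    return [payload]


def status_for(path):
    """Call the app directly and return the status line, for quick checks."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    app({"PATH_INFO": path}, start_response)
    return captured["status"]
```

To poke at it locally, the standard library's `wsgiref.simple_server.make_server` can serve `app`, and `status_for("/old-pricing")` lets you confirm the status line without starting a server.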

I should not be showing you this, but here’s the crawl stats report of the blog you’re reading (productgrowth.blog)

PS: You can check your crawl stats here in Google Search Console → Settings → Crawl Stats → Open Report.
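If you also have raw server access logs, you can get a rough picture of crawl waste without waiting for Search Console. The sketch below assumes the common "combined" log format and matches Googlebot by user-agent string only, which can be spoofed, so treat the numbers as approximate. The function name and the sample log lines are made up for illustration.

```python
import re
from collections import Counter

# Matches the request, status, and user-agent parts of a combined-format line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines):
    """Tally Googlebot hits by status code, plus the paths returning 404/410."""
    counts = Counter()
    dead_paths = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue
        status = match.group("status")
        counts[status] += 1
        if status in ("404", "410"):
            dead_paths[match.group("path")] += 1
    return counts, dead_paths


# Made-up sample lines, for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_lines = [
    f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2024:13:56:02 +0000] "GET / HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [10/Oct/2024:13:57:10 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "Mozilla/5.0 Chrome/120.0"',
    f'66.249.66.1 - - [10/Oct/2024:13:58:44 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
]
counts, dead_paths = googlebot_status_counts(sample_lines)
print(counts.most_common())
print(dead_paths.most_common(10))
```

The paths at the top of `dead_paths` are the ones worth fixing, redirecting, or switching to a 410 first.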

5) You don’t look “worth citing” yet

Citations are a trust play. If you’re never referenced by authoritative domains (like reputable news and category leaders), answer engines have less reason to rely on you. Which means backlinks don’t matter as much as getting cited by other websites with good Domain Authority.

These off-page signals raise your odds of selection when an AI needs a clean, representative source.

For more context on this, read this report by Ahrefs.

How I’m tackling this week by week → A practical playbook

Seed the UGC graph (ethically).

Pick 3–5 subreddits where your users hang out. Participate daily without links. Answer questions with specifics, drop your brand name naturally when relevant, and only start linking once your karma hits 100+ and looks organic. This matches how answer engines source “real people” opinions and tools.

Make “answer-shaped” pages.

For every core topic, add a short definition, a stat with a citation, and one expert line. Keep it scannable but grounded in references. Google’s AI features page is clear that standard quality and clarity still matter.

Become an entity, not a phrase.

Add Organization/Product schema (JSON-LD), sane sameAs links, and consistent naming. On the page, write in an entity-attributes-values cadence so extraction is trivial.

Clean crawl waste.

Kill dead pages with proper 404/410s (410 can drop a bit faster and get cleanly removed), fix soft 404s, and watch Crawl Stats for Discovery vs Refresh. You want a healthy flow of new content (discovery) and efficient refreshes.

Pursue “authority” mentions.

Two quality news mentions can outweigh twenty blog swaps. Even Perplexity is experimenting with compensation and publisher programs, signaling that publisher relationships matter more each quarter.

Expect fewer clicks, and optimize for the ones that remain.

When you do appear under an AI answer, your title, favicon, and meta need to carry more weight than before. The pie is smaller; fight for a bigger slice with unmistakable value props and clear next steps.

Here’s what I’ve changed in our own content.

Stop skipping the basics. We’re tightening internal links around entity hubs, rewriting openers to start with definitions + data + a named expert, and fixing response times that were creeping north of what bots love.

We’re also re-publishing a few “how-to” guides with clearer attribute/value language so our product features are extractable at a glance.

On the off-page side, I’m doubling down on helpful comments in live threads (no naked links) and pitching one solid, data-backed story to an industry publication each month.

I don’t expect a single “hack” to flip citations overnight. This is compounding work. But if you become easy to fetch, easy to understand, and easy to trust (across both UGC ecosystems and your own site), you give AI answer engines no excuse to ignore you.

Get a Free AI Citation Analysis

Is your website being ignored by AI? Drop a URL in the comments below with the main query you want to be cited for. I'll personally review it and send you a full analysis, including active Reddit threads you can join.