How Anthropic and Claude.ai Hacked Growth – Can You Adopt Anything From It?

From stealth to $60B: the growth hacks behind Anthropic & Claude.ai that every AI startup can steal

Anthropic’s rise from a low-profile startup founded by former OpenAI researchers in 2021 to a $61.5B valuation by early 2025 is one of the most striking growth stories in AI.

Their growth playbook relied on big bets on credibility and partnerships, not on chasing consumer hype.

Today, we unpack exactly how Anthropic (and its Claude models) grew so fast, with data and examples.

Claude Snapshot

Anthropic PBC is an AI safety and research startup (HQ in San Francisco) founded in 2021 by former OpenAI researchers – notably siblings Dario and Daniela Amodei plus Jack Clark, Tom Brown, and others.

As a public-benefit corporation, Anthropic explicitly prioritizes “safe” and “steerable” AI. Its website says it “researches and develops AI to study their safety properties at the technological frontier” and uses that research to deploy more reliable models.

Their flagship product line is the Claude family of large language models (Claude, Claude 2, Claude 3, etc.), positioned as a competitor to OpenAI’s ChatGPT and Google’s Gemini.

In short: founders from OpenAI, SF-based, mission “AI aligned with human values”, product = Claude (next-gen assistant).

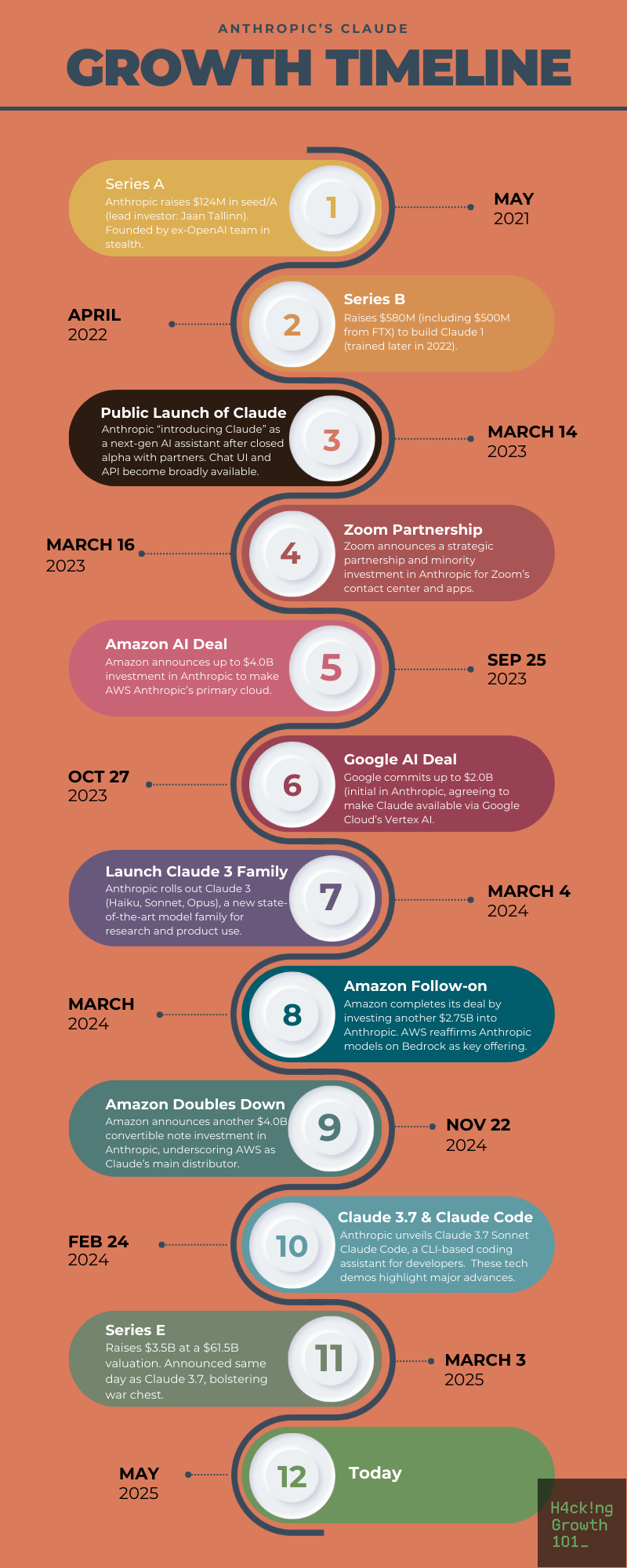

Timeline of Key Milestones (2021–2025)

Growth Lever #1: Distribution-First Partnerships

Rather than chasing consumer virality, Anthropic rode on the coattails of industry giants. Their strategy: embed Claude into big platforms so customers find them instead of the other way around. Key examples:

Amazon Web Services (Bedrock) – Under Amazon’s initial investment of up to $4B (announced in September 2023), Anthropic made AWS its primary cloud provider. Claude, already one of Bedrock’s launch models, became a flagship option there, and AWS customers got early access to new features. As AWS SVP Matt Garman put it, “our customers will be able to access Claude … to innovate on AWS”. In practice this meant enterprises using AWS could pick Claude alongside other LLMs in Bedrock. (By the end of 2024, hundreds of companies on AWS had reportedly deployed Claude via Bedrock, in sectors from financial services to healthcare.)

Google Cloud (Vertex AI) – Weeks after Amazon’s announcement, Google committed up to $2B, and Claude models were later added to Vertex AI. Anthropic models appeared as options in Google Cloud’s AI platform, making it easy for teams already on GCP to test Claude. In other words, Anthropic piggybacked on Google’s cloud sales channels.

Zoom AI Companion – In May 2023 Zoom not only invested in Anthropic but also said it would weave Claude into its products (contact center, team chat, whiteboard, etc.). Zoom CPO Smita Hashim praised Claude’s “responsible and safe” output, and Anthropic CEO Dario Amodei noted Zoom’s federated approach to AI. For Claude, the deal potentially put the model in front of millions of enterprise Zoom seats by 2024.

Notion and Other Apps – Anthropic partnered with productivity apps like Notion. (A Notion case study reportedly credits Claude with powering advanced search and auto-summarization in its workspace, cutting query times by ~35%.) By plugging Claude into established tools, Anthropic spread usage through pre-existing user bases.

Slack Integration – They even released a Slack app (“Claude for Slack”) so teams could chat with Claude in their workspaces. (Notably, the integration assures companies it won’t train on their Slack data by default.)

Other Partners – Salesforce Ventures joined as an investor (hinting at links to Salesforce’s Einstein AI), and Amazon’s own Alexa now uses Claude in Alexa+ products. In short, Anthropic’s distribution playbook was: get embedded into the platforms where your customers already live. The result was hundreds of enterprise customers consuming Claude behind the scenes of bigger products, rather than Anthropic having to find end-users itself.

Growth Lever #2: Safety & Governance Moat

A huge part of Anthropic’s pitch is “we’re the safe, enterprise-friendly choice.” They leaned into this as a competitive advantage (especially against a more “move fast” OpenAI). Legally, Anthropic is a public-benefit corporation (PBC), and an independent Long-Term Benefit Trust elects some of its board members, explicitly to keep safety ahead of pure profit. They even published a 22-page “Responsible Scaling Policy” to show they’re thinking hard about AI risks. In interviews, co-founders emphasize that Anthropic’s DNA is built around ethics, and as one former Anthropic executive told Business Insider, “Anthropic’s safety-first policy is one of its main differentiators”.

Enterprises noticed. For regulated industries, Anthropic didn’t just hand customers an unfiltered model; they built real-world tools with strict guardrails.

For example, Anthropic teamed with Accenture and AWS to customize Claude for regulated industries (healthcare, finance, government).

DC’s Department of Health CIO Andersen Andrews said they built a “Knowledge Assist” chatbot (powered by Claude on AWS Bedrock) to answer public queries in English and Spanish. He noted it gives precise, context-aware answers to citizen questions on health programs, increasing engagement. In insurance underwriting, Accenture and AWS showed off Claude-based workflows driving speed and consistency.

Anthropic’s own CEO Dario Amodei said it best: “Our focus on model performance and safety, combined with AWS’s security and Accenture’s industry expertise, is building tailored solutions that enable key use cases”.

In short, clients in health, finance, legal, and government felt comfortable choosing Claude because Anthropic had a “safety moat” – both in messaging and in technical design (e.g. “constitutional AI” training).

Even on privacy and data use they took pains. Anthropic claims it doesn’t train on user inputs by default, a swipe at other labs. Their Slack app explicitly assures companies: “Anthropic does not use this data to train models”. These policies – and the very fact of having them – helped CIOs trust Anthropic with proprietary data. (Security experts do warn that any external LLM entails risk, but Anthropic’s stance at least addresses the immediate fear of “Will this leak my data or train a future model?”.)

In practice, having a safety/governance story meant Anthropic got deals like Amazon’s and joint announcements with AWS/Accenture highlighting compliance for regulated sectors. It also let them cultivate a corporate image of “responsible AI,” which resonated with budget-holders more than another consumer chatbot ever would.

Growth Lever #3: Wow-Moment Tech Demos

Even with an enterprise focus, Anthropic wowed the tech press and developer community with flashy demos that showed off Claude’s chops. For example, the February 2025 release of Claude 3.7 Sonnet, billed as a “hybrid reasoning” model, was a showstopper: it can answer instantly or, on demand, switch into an extended-thinking mode that shows its step-by-step reasoning to the user.

And then there’s Claude Code. In February 2025 Anthropic released, as a research preview, a command-line coding assistant that lets developers delegate coding tasks to Claude straight from their terminal. Suddenly Claude wasn’t just a chatbot; it was a terminal pair-programmer.

This demo went viral among developers (on forums and Twitter, many shared screenshots of Claude writing or debugging code). It underscored Claude’s strength on programming tasks – which Anthropic also touted in their blog with metrics (e.g. “Cursor noted Claude is best-in-class for real-world coding tasks”).

The effect of these demos: they generated headlines about Claude’s 100K-token (and later 200K-token) context windows and pitches like “Claude as GitHub Copilot, but more”, creating buzz in tech media. Even if this didn’t directly sell to enterprises, it built credibility.

It showed customers (and press) that Anthropic was not a copycat but an innovator. The wow factor of 100K- and then 200K-token context windows and an AI coding CLI made even skeptics sit up and say, “Wow, Claude really can do some things ChatGPT can’t.” It also helped recruit talent, since smart AI engineers like to join a team that is breaking records.

Plus, it gave startup-watchers something to tweet about when the name “Claude” came up in AI circles.

Growth Lever #4: Developer-Centric Go-To-Market

Anthropic treated developers like royalty. Early on, they offered a free tier on Claude.ai and a frictionless API, so any startup or dev could play with Claude and build proofs of concept. They backed this up with polished docs and tooling. The Anthropic API docs emphasize trustworthiness and user-friendliness, touting Claude as “the most trustworthy and reliable AI available” that helps enterprises build safe applications.

They also created community momentum. In April 2025 Anthropic announced “Code with Claude”, its first developer conference (San Francisco, invite-only), focused on real-world Claude use cases. This one-day event promised interactive workshops, direct access to product teams, and sessions on their new Model Context Protocol (MCP) and agent tools. Initiatives like hackathons (e.g. “Build with Claude” events) and partnerships with startup incubators also seeded a developer ecosystem.

For example, they gave out API credits at hackathons and ran online workshops teaching developers how to use Claude. The strategy was clear: get developers hooked, let them showcase Claude in projects, and let word-of-mouth spread.

All this dev focus paid off. Enthusiastic developers were posting comparisons (“Claude vs ChatGPT”), building internal demos for their companies, and even telling CIOs “hey, I tested Claude, it works great.” It also meant that when Anthropic’s enterprise sales team came calling, many companies already had internal champions.

Growth Lever #5: Enterprise-Vertical Focus

Rather than chasing virality with consumer apps, Anthropic built for specific verticals. They sold Claude as a solution to real business problems. Legal and finance are big ones:

Thomson Reuters (through its CoCounsel tax research tool) uses Claude to help tax professionals draft answers.

LexisNexis includes Claude for legal research.

A number of financial services firms (like M1 Finance, Bridgewater Associates, Broadridge) signed up via AWS Bedrock.

In healthcare and life sciences, Novo Nordisk used Claude to automate regulatory report writing (reportedly cutting weeks of work to minutes).

The DC Department of Health chatbot is another concrete example.

In insurance, Anthropic teamed with Accenture to build underwriting assistants on Claude.

Even Salesforce invested in Anthropic through Salesforce Ventures (around the time it launched Einstein GPT), a sign that enterprise CRM and cloud players are clearly paying attention.

By focusing sales and product development on industries, Anthropic avoided the red ocean of consumer chatbots. They developed specialized features for lawyers, accountants, analysts, etc.

Their sales teams targeted C-suite buyers with ROI stories (“10x productivity gains” drawn from other customers’ use cases). In short, instead of trying to be the next ChatGPT for everyone, they staked out niches where companies needed heavily customized AI assistants (law firms, banks, healthcare providers, governments) and delivered enterprise-ready tooling for those.

This vertical approach meant real paying customers (and referral cases) rather than fickle free users.

Results & Traction Indicators

How do we know this strategy “worked”?

Several data points show traction. On revenue, Anthropic is now in the billion-dollar club. Inc. Magazine reported that by early March 2025 Anthropic had roughly $1.4 billion in annualized recurring revenue (ARR), which implies about $117M in monthly revenue. That is up from about $1.0B ARR at the end of 2024, a 40% jump reportedly tied to recent product improvements.
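
As a quick sanity check on these figures (using the reported, unaudited ARR estimates, not company-disclosed financials), the monthly and growth numbers work out as follows:

$$
\frac{\$1.4\,\text{B ARR}}{12\ \text{months}} \approx \$116.7\,\text{M per month},
\qquad
\frac{\$1.4\,\text{B} - \$1.0\,\text{B}}{\$1.0\,\text{B}} = 0.40 = 40\%
$$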

Inc. notes this revenue level roughly matched OpenAI’s revenue from 17 months earlier: Anthropic still trails OpenAI by well over a year, but it is climbing quickly up the AI revenue charts.

User and customer growth are harder to pin down with public data, but there are hints. Claude’s web and mobile apps reportedly have well over ten million monthly users (some analyst blogs cite ~16–19 million MAUs in early 2025, though these are estimates).

The Alexa+ integration alone could expose Claude to millions of households. On the enterprise side, dozens of big-name companies are test-driving Claude; Zoom, Snowflake, Pfizer, Novo Nordisk and others are explicitly named as Claude users.

On AWS Bedrock alone, customers as varied as LexisNexis (legal), Bridgewater (finance), Cloudera (tech), and Delta Air Lines are listed as Anthropic/AWS references. Internally, Anthropic’s own hiring and team size tell a story: from ~300 employees at the start of 2023 to roughly 800 by 2024, with hundreds more likely added by 2025.

Other traction signs:

Claude is built into products (like Zoom, Notion, CoCounsel) that collectively reach millions of end users. It powers AI features in business software and developer tools. Its ARR growth ($1.0B to $1.4B in a few months) clearly indicates customers are paying. And with each new funding round, Anthropic’s post-money valuation has ratcheted up (most recently to $61.5B), reflecting investor faith in its metrics. In sum, revenue is exploding, and a growing roster of corporate customers is laying the groundwork for even more growth.

Takeaways for Startup Readers

Anthropic’s story offers a blueprint for founders in AI or any deep-tech:

Team up with distribution partners early. Don’t try to reach everyone yourself. Anthropic plugged into AWS, Google Cloud, Zoom and others so those channels could sell Claude on their behalf. Seek out platforms (cloud providers, enterprise apps, marketplaces) where your potential customers already are. Embedding your tech or co-marketing with incumbents can shortcut growth.

Differentiate beyond the obvious. Anthropic’s safety/governance angle set them apart. For your startup, lean into whatever advantage (policy, privacy, performance, cost) resonates with your audience. Make that part of your core messaging, so customers pick you for a reason, not just because your pricing is a hair cheaper.

Build for real use-cases, not just hype. Anthropic targeted industries (law, finance, healthcare) with custom demos and case studies. Identify niches where your solution solves an urgent pain. Get one or two marquee customers there and highlight those wins. Even if it’s not “sexy,” industry adoption shows substance.

Invest in developers. Anthropic’s free tier, docs, hackathons and even dev conferences created grassroots evangelism. Encourage early adopters to integrate and talk about your product. A helpful, well-documented API and community initiatives pay off in word-of-mouth and organic traction.

Show off your tech. Star performance earns attention. Anthropic’s demos (huge context windows, live coding assistant) generated free press and made people curious. If you have a killer feature or an X-factor (speed, accuracy, novel capability), highlight it with videos or interactive demos. It doesn’t replace sales, but it can prime the pump.

Next Steps

With a $61.5B valuation and $1.4B+ in ARR, Anthropic is now focused on “frontier AI” and expansion. We see hints of what’s next: continuing to push technical limits (e.g. web search on the Claude API, “AI for Science” initiatives), expanding multimodal and agent capabilities, and internationalization.

Investors like Google and Amazon appear to be doubling down: Google is reportedly poised to invest another $750M this year, and Amazon is making Claude a centerpiece of AWS’s strategy. Anthropic may also eye an IPO down the line, given its scale.

For founders, Anthropic’s story is a masterclass in how a startup can grow by being “boring” in all the right ways: enormous funding runway, deep tech demos, developer evangelism, and a laser focus on enterprise problems.

It shows there are multiple paths to success beyond consumer virality. If you’re building the next big AI tool or platform, consider how to apply these lessons: align with ecosystem giants, double down on your unique edge, and prove your value in real business use cases.

Do that, and like Anthropic, you might just break $60B one day (or at least break open your sector).